One of my professional interests is Artificial Intelligence (AI). I think I’ve been interested in the simulation of human personality by software for as long as I’ve been interested in programming, and I’ve heard most of the jokes about the subject, particularly those to do with ‘making friends’. 🙂 In fiction, most artificial intelligences have something of an attitude problem. HAL in 2001 is insane. The Terminator is designed to be homicidal. The Cylons in the new version of Battlestar Galactica and its ‘prequel’ series, Caprica, were originally designed as mechanical soldiers and then evolved into something more human, with an initial contempt for their creators. The moral of the story – and it goes all the way back to Frankenstein – is that there are indeed certain areas of computer science and technology where man is not meant to meddle.

Of course, we’re a long way from creating truly artificial intelligences, ones capable of original thought that transcends their programming. I recently joked that we might be on our way to a true AI when a program tells us a joke it has made up itself that is genuinely funny! I think the best we’ll manage is a clever software conjuring trick: something that, through deft programming and a slight suspension of disbelief on the part of the people interacting with it, gives the appearance of intelligence. That in itself would be quite something, and would probably serve many of the functions we might want from an artificial intelligence; it’s certainly the aspect that interests me in my own involvement in the field.

But the problem with technology is that something can always come at us unexpectedly and catch us out. It’s often been said that the human race’s technical ability to innovate outstrips its ability to develop the moral and philosophical tools our culture needs to deal with those innovations, by anywhere from a decade to 50 years. In other words, we’re constantly playing catch-up with the social, legal and ethical implications of our technological advances.

One area where I hope we can at least do a little forward thinking on the ethical front is AI. Would a truly ‘intelligent’ artificial mind be granted the same rights and privileges as a human being, or at the very least an animal? And how would we know when we had achieved such a system, when we can’t even agree on a definition of intelligence, or on whether animals themselves are intelligent?

Some years ago I remember hearing a BT ‘futurist’ suggest that it might be no more than a decade or so before it would be possible to transfer a human being’s memories into computer memory and have them available for access. This isn’t the same as transferring consciousness; since we have no idea what ‘consciousness’ is, it’s hard to imagine a tool that could do such a thing. But I would accept that transferring memories into storage might be possible, and might even have some advantages, even if there are ethical implications, and privacy implications of the most extreme kind, to deal with. Well, it’s certainly more than a decade since I heard that suggestion, and I don’t believe we’re much closer to developing such a technology, so maybe it’s harder than was thought.

But what if…

In the TV series ‘Caprica’, the artificial intelligence that controls the Cylons comes from an online personality, created by a teenage girl as her avatar in cyberspace, which is downloaded into a robot body. In Alexander Jablokov’s short story ‘Living Will’, a computer scientist works with a computer to develop a ‘personality’ that mirrors his own but won’t suffer from the dementia that is starting to affect him. In each case a sentient program emerges that is, in every visible respect, identical to the personality of its original creator; the ‘sentient’ program thus created is a copy of the original. In both Caprica and ‘Living Will’, the software outlives its creator.

But what if it were possible to transfer the consciousness of a living human mind into such a sentient program? Imagine creating and ‘educating’ such a piece of software to the point at which your consciousness could wear it like a glove. Starting from a situation in which the original mind looks on its copy and appreciates the difference, will it ever be possible for that conscious mind to be moved into the copy, endowing the sentient software with the self-awareness of the original, so that the mind knows it exists as a human mind even while it is in the software?

Such electronic immortality is (I hope) likely to remain science fiction for a very long time. The ethical, eschatological and moral questions raised by shifting consciousnesses around are legion. Multiple copies of minds? Would such a mind be aware of any loss between human brain and computer software? What happens to the soul?

It’s an interesting view of a possible future for mankind: living forever in an electronic computer at the cost of becoming less than human. And for those of us with spiritual beliefs, it might be the last temptation of mankind: to live forever and turn one’s back on God and one’s soul.

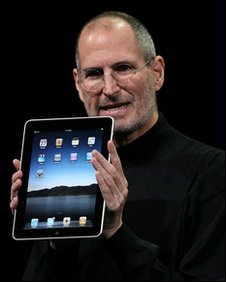

Well, the fuss over the launch of the iPad has died down somewhat. It wasn’t the Second Coming or the Rapture, the world didn’t suddenly turn rainbow-coloured (not for me, anyway), and the Apple fans have gone quiet. So perhaps it’s time to take a few minutes to think about what the iPad might mean for the future.

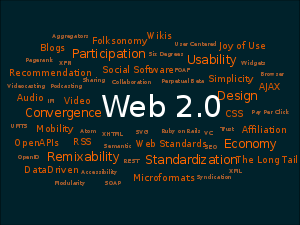

I really dislike IE6. I hate having to support it for some of my clients, and really wish they could work out how to convince their customers to upgrade. But my clients are real-world guys; they deal with nuts and bolts, ironmongery, bank accounts, etc. Their customers tend to be real-world people as well, and by ‘real world’ I mean not software, media or technology companies.

I recently found this on my Twitter feed, from @jakebrewer: “Yes! Note from newly devised Hippocratic oath for Gov 2.0 apps: ‘Don’t confuse novelty with usefulness.’” It is so true – and that comes from someone who spent part of his MBA working on the management of creativity and innovation.

There is a science fiction story by Arthur C. Clarke in which two planetary empires are fighting a war; it’s called ‘Superiority’, for anyone who wants to read it. In this tale, one side decides to win the war by making use of its technological know-how, which is ahead of the opposing side’s. Unfortunately, each innovation has some unforeseen side effect, and cumulatively these leave the technologically advanced empire innovating itself into defeat.

Well, I guess that as someone with technical credentials I should comment on the unveiling of Apple’s new tablet machine, the iPad. The first thing I will say is that I’m not an Apple fanboi, and so am probably a hard audience to impress. Anyway,

There is a wonderful phrase in film and TV script writing –

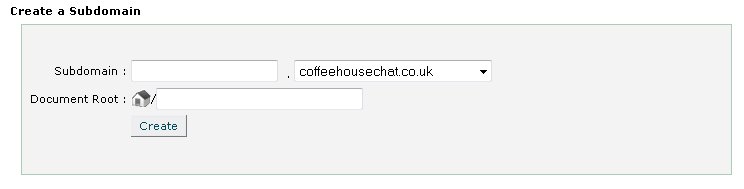

I’m currently renovating a site of mine –